10.2 MODULATING LONGEVITY AND RATE OF AGING: CALORIE RESTRICTION

The popular press is filled with stories of how specific foods and nutrients extend life span or improve health. Life spans of 130–140 years have been reported in individuals claiming that their long life can be attributed to special diets, such as diets consisting of apricots, yogurt, and “special” breads. Personal testimonies on the life-extending and antiaging benefits of vitamins E, A, B12, and C appear regularly in books and popular magazines. But rigorous scientific evaluations of individual foods and nutrients reported to extend life have been unable to demonstrate any life-span extension or specific health benefit beyond that associated with alleviating a particular deficiency (BOX 10.1). Only a reduction in caloric intake, commonly referred to as calorie restriction (CR), or dietary restriction (DR), has been shown to extend both mean and maximum life span.

| BOX 10.1 FROM MYTH TO SCIENCE: THE HEALING AND HEALTH POWERS OF FOOD |

|

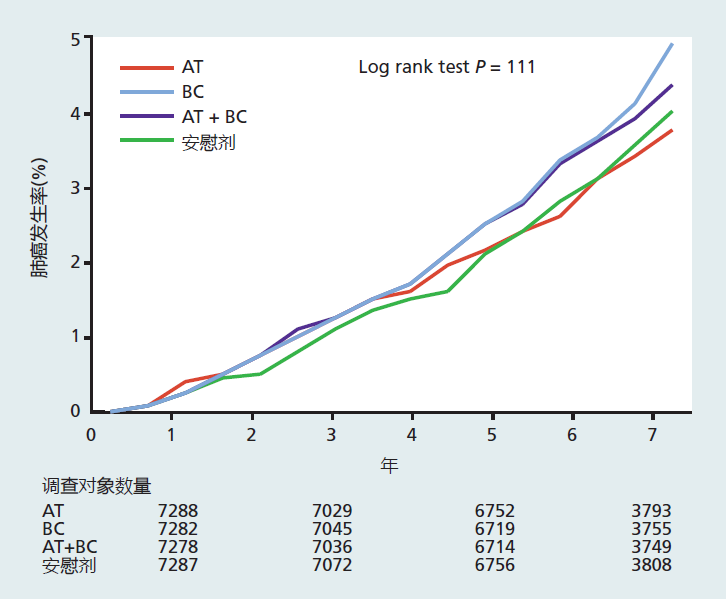

Rigorous scientific investigations have conclusively shown that low-calorie diets slow the rate of aging and decrease the risk for many age-related diseases. However, even with the overwhelming evidence of the benefits of a low-calorie diet for health and aging, we live in a time when almost 70% of the American population is overweight or obese. Clearly, the majority of Americans are having difficulty accepting the indisputable scientific evidence that low-calorie diets are a simple and low-cost way of improving health, delaying age-related diseases, and slowing the rate of aging. Americans spend billions of dollars per year on individual foods and food supplements with supposed health benefits, as promoted through personal testimony, oversimplification of basic biological mechanisms, and misinterpretation of valid scientific results. A current Web-based advertisement by a food supplement company lists 40 benefits of wheatgrass, including, “Chlorophyll is the first product of light and, therefore, contains more light energy than any other element.” In other words, eating wheatgrass will give you the energy of light. Wow! At present, there is no reliable or complete scientific evidence showing that any single food or nutrient will, in itself, prevent, delay, or cure disease or slow the rate of aging.

Why are we so easily persuaded by outrageous claims about the health benefits of food when the scientific evidence shows otherwise? The complexity of human survival and health psychology provides many answers. One of the more important factors would certainly be that the health and healing powers of food are deeply rooted in our cultural and religious traditions. It was not until the late nineteenth century that safe and effective medicines, as we know them today, began to appear. This means that humanity has had only a little over 100 years to separate food from medicine, an extremely short time in which to adopt a cultural change.
Here, we briefly explore the origins of naturally occurring food as medicine and how science has begun to separate the two.

Food as medicine

“Let food be thy medicine and medicine be thy food.” These words were written by Hippocrates of Cos (460–370 BCE), widely regarded as the father of Western medicine. Hippocrates's therapeutic method was based on a close relationship between the natural world and the human body. According to the Greeks of his day, all matter, including food and the four bodily humors (phlegm, blood, yellow bile, and black bile), was composed of four elements (earth, air, fire, and water), and an imbalance of these elements caused the abnormal functioning of matter, including bodily functions (disease). Hippocrates's method was to diagnose which element was out of balance and then prescribe a food with a high concentration of the opposite element (earth, air, fire, or water) so as to put the body back in balance. The Hippocratic method, with its emphasis on food, established the direction of Indo-European medicine, a method that would last until the Enlightenment of the eighteenth century.

The noted Roman physician Galen (129–200 CE) relied heavily on Hippocrates when he compiled his writing on medicines into a multivolume set, On the Powers of Food. Galen described how properties in grains, fruits, vegetables, and some animal flesh have specific uses in curing disease. He suggested that food should always be the first step in treatment, followed by drugs, and surgery as the last resort. With the adoption of Galenic medicine by the Roman emperors, food as medicine became the standard medical treatment throughout the empire. The rise of Islamic medicine in the Arab and Persian cultures came after the fall of the Roman Empire, and this also relied heavily on food as medicine.
The famous Islamic physician Ibn Sinã (Avicenna) translated the medical writings of Hippocrates and Galen into Arabic and added his own techniques, which would be the basis of Islamic medicine for centuries. Ibn Sinã suggested that the healing powers of food were Allah's gift to humanity. This prophetic statement would prompt some Islamic clerics to suggest that maintenance of health through food was one way of achieving holiness.

Traditional Chinese medicine, known as shi liao, most likely got its start during the first millennium BCE with the writing of the Huangdi Neijing, also known as The Yellow Emperor's Classic of Internal Medicine. Like Hippocrates, the Huangdi Neijing placed prime importance on keeping the body in balance. Food was classified into cold, wet, hot, and dry and was used to oppose disease through the concept of yin-yang, the belief that there are two complementary forces in the universe. The Huangdi Neijing stated, “If there is heat, cool it; if there is cold, warm it; if there is dryness, moisten it; if there is dampness, dry it.” Yin foods are those that are considered cold and wet, such as fruits, seafood, beans, and vegetables; these decrease energy and increase the water content of the body. Yang foods are hot and dry foods, such as ginger, onions, alcohol, and most meats, which increase energy and decrease the water content of the body. The energy-enhancing effect of hot foods was based on the idea that yang foods contain significantly more fat and protein than yin foods and thus have greater energy content. Traditional Chinese medicine remains active throughout China and other parts of the world.

The Western concept of food as medicine began to change with the rise of the scientific method during the Enlightenment. For example, invention of the microscope provided evidence that microorganisms, not food, were the cause of the diseases that killed most people at that time.
Ironically, spoiled food and contaminated water were discovered to be the major vectors for the microorganisms causing diarrheal diseases, one of the major causes of death in children until 1920. During the eighteenth and nineteenth centuries, the biological and medical sciences discovered that internal therapies for disease were most effective when highly focused and specific to the chemical and biochemical nature of the cell or invading organism. The food-based medical treatments advocated by Hippocrates, Galen, and Ibn Sinã were far too general to be effective against the specificity of disease.

Rise of nutrition as biological science and decline of food as medicine

Western medicine's reliance on drugs as the treatment of choice for disease, which began in the late nineteenth century, brought about a shift in how scientists approached the study of food. In the early part of the twentieth century, the physical and biological rather than the medical properties of food became the focus of research, and nutrition was added to the growing list of biological sciences. With this new biological focus, it became apparent that the nutrients in foods function primarily to support metabolism for growth, reproduction, and maintenance of bodily functions. It also became clear that individual foods or nutrients do not cause or cure the infectious diseases that still caused the majority of deaths. Not until the 1950s, when a significant number of people were living beyond 60 years of age, did the link between nutrition and disease again become an area of interest. Unlike in the preceding 5,000 years, scientists now applied the scientific method to determining whether individual foods or nutrients caused disease. To this end, between 1950 and 1980, several epidemiological studies reported that individual foods and nutrients such as dietary cholesterol, saturated fat, red meat, and so forth, were associated with a higher risk of heart disease and cancer.
Conversely, people consuming diets low in fat and rich in antioxidants had a lower risk of heart disease. The epidemiological evidence was often corroborated by results in laboratory animals and in small and short-term human trials. The short-term human studies did not, however, measure actual health outcomes, such as cancer rates or heart disease. Rather, they evaluated some biological marker that may be related to the disease outcome. For example, short-term human studies of the effect of vitamin E on cancer would often report the level of antioxidants in the blood after a few months of taking a supplement, rather than the actual rate of cancer in the population. Together, the results from epidemiological, laboratory, and small-scale human studies led to a frenzy of recommendations by governments, independent nutrition organizations, and the food industry suggesting that people should increase or decrease their intake of a specific food or individual nutrient in order to lower their risk of disease.

Antioxidants prevent cancer! No, wait, they increase risk of cancer!

Unfortunately, conclusions about the medical and health benefits of an individual food or nutrient are often premature and reflect the failure of both scientists and the lay press to wait until completion of all the studies needed to conclude cause and effect. For example, during the 1970s and 1980s, many epidemiological studies reported that cancer rates were significantly lower in people who consumed diets with high amounts of the antioxidants vitamin E (α-tocopherol) and β-carotene (precursor of vitamin A) than in individuals with diets low in these vitamins. Studies in laboratory animals showed that these vitamins, given in the diet at levels several times the animals' requirement, prevented the growth of tumors. Hundreds of human trials with numerically small populations corroborated the epidemiological and animal studies, describing changes in biological markers for cancer.
Based on these results, food companies began to advertise the benefits of vitamin E and β-carotene on their product labels and in commercials, and sales of supplements skyrocketed. The dogma that vitamin E and β-carotene prevent cancer had entered the American culture. The dogma crash-landed in the 1990s, with the news that β-carotene and vitamin E were not showing any effect on cancer. In 1994, a cancer-prevention study of Finnish male smokers demonstrated that β-carotene not only did not prevent lung cancer in men who smoked but may actually increase the risk of cancer (Figure 10.2). The results of the study drew substantial criticism, because it focused on smokers rather than on otherwise risk-free individuals. Subsequent trials performed in nonsmokers confirmed the initial findings and failed to demonstrate any effect of vitamin E or β-carotene on mortality rate. At about the same time, longevity studies in mice also found that neither cancer rates nor life span were altered by increasing the vitamin E content in the diet. The results of these investigations prompted many government agencies and the American Cancer Society to issue warnings over the safety of β-carotene and to unequivocally state that β-carotene does not reduce the risk of cancer or increase the length of life.

Figure 10.2 Studies of the effects of vitamin E and β-carotene on lung cancer rates. The data show the cumulative incidence of lung cancer in study participants receiving α-tocopherol, or vitamin E (AT), β-carotene (BC), α-tocopherol and β-carotene (AT + BC), or a placebo. (Data from Albanes D et al. 1996. J Natl Cancer Inst 88:1560–1570. With permission from Oxford University Press.)

Basic nutritional advice has been right all along

Clearly, not all foods and nutrients have been tested to the degree of β-carotene, vitamin E, or other nutrients now shown to have no effect on the risk of disease. It would be irresponsible to suggest that no individual food or nutrient could, at some point, be shown to have a specific effect on a disease. Nonetheless, nutritionists, public health experts, and other health professionals are starting to gain a clearer picture of the effect of food on disease. Their conclusion, after the millions of dollars spent on researching the health benefits of food, is what nutritionists have been saying for years: eat your vegetables, eat everything in moderation, and eat a wide variety of foods. |

The life-extending properties of calorie restriction have been demonstrated in virtually all nonhuman species tested, including yeast, worms, flies, and rodents. Only a few genetically altered strains do not respond to CR. Although the life-extending properties of CR in nonhuman primates remain controversial, there exists overwhelming evidence that CR delays the onset of disease and slows the rate of aging in rhesus monkeys. The current data, particularly those on nonhuman primates, strongly suggest that reducing caloric intake may be one mechanism for modulating the rate of aging, increasing longevity in humans, or both. In this section, we explore the use of CR as a method to modulate longevity and the possible effectiveness of this dietary intervention in extending life span and improving health in humans.

Calorie restriction increases life span and slows rate of aging in rodents

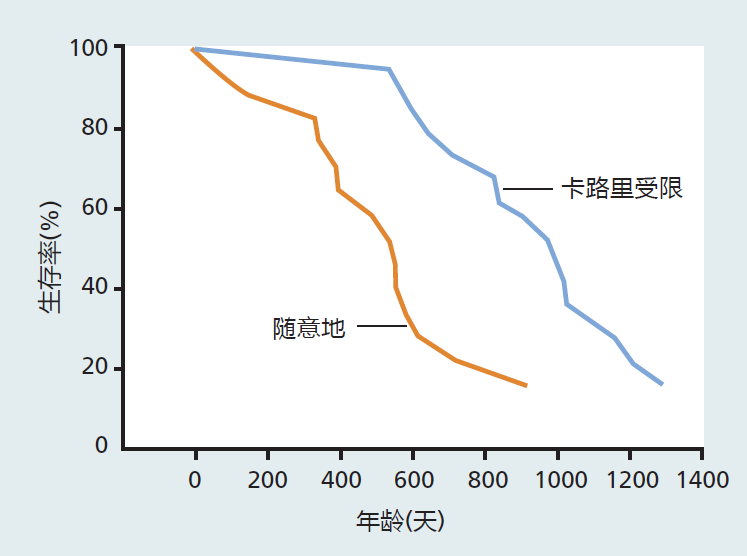

A publication by McCay, Crowell, and Maynard in 1935 described, for the first time, an intervention that led to increased life span. As shown in Figure 10.3, these investigators found that reducing rats' caloric intake prolonged mean and maximum life span, when compared with rats allowed to consume as many calories as they wished (known as ad libitum feeding). The uniqueness of this experiment compared with previous investigations was that the investigators reduced only the caloric content of the food without altering the nutrient composition—that is, vitamins and minerals—needed to prevent deficiencies. Previous investigations had demonstrated an increased life span by food, rather than calorie, restriction. While longer life was achieved by food restriction, many animals died early in the life span due to nutrient deficiencies. Thus, McCay and colleagues showed that calorie restriction increased life span, whereas food restriction reflected only the fact that hardy animals capable of surviving nutritional deficiencies lived longer—that is, it reflected selective mortality.

Figure 10.3 Life span of non-calorie-restricted (feeding ad libitum) versus 40% calorie-restricted male rats. These data, published by McCay and colleagues in 1935, were the first to show that calorie restriction without malnutrition increased life span in rodents. (Data from McCay CM et al. 1935. J Nutr 10:63–79. With permission from the American Society for Nutrition.)

In the experiments by McCay and his colleagues, as well as numerous others, the CR was begun at or near weaning (about 3 weeks of age in mice and rats). As a result, calorie-restricted mice and rats were smaller in both weight and size than ad libitum–fed animals. This observation led most researchers to conclude that retarded growth and development was the mechanism underlying the life-extending properties of CR. It was not until the early 1980s that experiments showed that calorie restriction begun as late as 18 months of age in previously ad libitum–fed mice and rats (that is, in fully grown animals) increased mean and maximum life span.

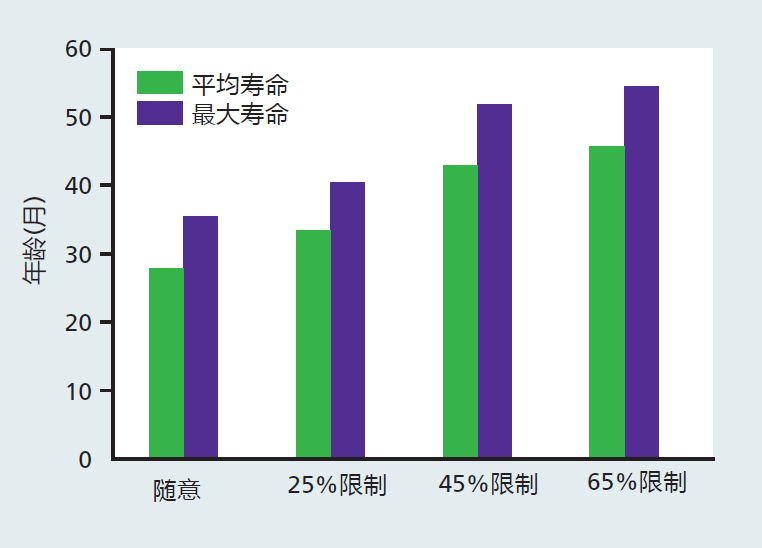

The level of CR used in most experiments has been rather severe, at about 60%–70% of ad libitum feeding (that is, a 30%–40% restriction). These values were experimentally established as the levels that provided maximum life extension without early-life mortality due to starvation. The use of such levels of CR has been criticized, because it is unlikely that a 40% restriction could be achieved in humans (see the discussion on the effectiveness of CR in humans later in this section). To answer this criticism, researchers conducted life-span experiments that tested different levels of calorie restriction. The data from these studies showed that extension of life increases as caloric intake decreases (Figure 10.4). Other data have shown that a reduction in calories of only 5%–10% can increase life span, although only very modestly. The results from these experiments indicate that calorie restriction at levels appropriate for humans could extend the life span.

Figure 10.4 Mean and maximum life spans of male rats at different levels of calorie restriction. Note that the differences in mean and maximum life span between 25% and 45% CR are considerably larger than those between 45% and 65% CR. In fact, the researchers found that many rats died due to starvation in the 65% CR group, and they suggested that the degree of restriction should be no more than 50%. Nonetheless, these data show that life span increases as the degree of CR increases. (Data from Weindruch R et al. 1986. J Nutr 116:641–654. With permission from the American Society for Nutrition.)
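The text expresses CR levels in two ways: as intake relative to ad libitum feeding (60%–70%) and as degree of restriction (for example, 25%, 45%, and 65% CR in Figure 10.4). The two are complements of each other. The short Python sketch below makes the conversion explicit. The function names and the 100 kcal/day ad libitum intake are illustrative assumptions, not values taken from the cited studies.

```python
def restriction_percent(intake_percent_of_ad_libitum: float) -> float:
    """Convert intake (as % of ad libitum) to degree of restriction (%)."""
    if not 0.0 <= intake_percent_of_ad_libitum <= 100.0:
        raise ValueError("intake must be between 0 and 100 percent")
    return 100.0 - intake_percent_of_ad_libitum


def restricted_intake(ad_libitum_kcal: float, restriction_pct: float) -> float:
    """Daily calories allowed at a given degree of restriction."""
    if not 0.0 <= restriction_pct <= 100.0:
        raise ValueError("restriction must be between 0 and 100 percent")
    return ad_libitum_kcal * (100.0 - restriction_pct) / 100.0


if __name__ == "__main__":
    # Hypothetical ad libitum intake, chosen only to keep the arithmetic readable.
    HYPOTHETICAL_AD_LIBITUM_KCAL = 100.0

    # Intake of 60%-70% of ad libitum corresponds to 40%-30% restriction.
    for intake in (60.0, 70.0):
        print(f"{intake:.0f}% of ad libitum = {restriction_percent(intake):.0f}% CR")

    # CR levels named in the Figure 10.4 caption.
    for cr in (25.0, 45.0, 65.0):
        kcal = restricted_intake(HYPOTHETICAL_AD_LIBITUM_KCAL, cr)
        print(f"{cr:.0f}% CR allows {kcal:.0f} kcal/day")
```

Read this way, the 40% restriction criticized as unrealistic for humans is the same condition as feeding at 60% of ad libitum.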

The CR procedures used today involve diets that ensure sufficient levels of vitamins and minerals to prevent nutritional deficiencies and malnutrition. Moreover, numerous experiments have shown that increasing the concentration of vitamins and minerals above the amount needed to prevent deficiencies does not increase life span. Together, the prevention of nutrient deficiency and the lack of effect with further supplementation strongly suggest that calories, rather than individual nutrients, are the underlying mechanism for the life-span extension observed under calorie restriction.

Calories are provided by the three macronutrients: proteins, fats (lipids), and carbohydrates (starches and sugars). To reduce the caloric content of the diet, the concentrations of these three macronutrients must be altered. Since these macronutrients have other functions in the body in addition to supplying calories, it is possible that the altered concentrations of proteins, fats, and carbohydrates, or some combination of the three, may be the factor leading to life extension in CR. However, several CR studies using various levels of macronutrients have shown that, in general, changes in calories, not in macronutrients, are responsible for the life extension in rodents.

Although the mechanism underlying the life-extending effect of CR in rodents has yet to be determined, virtually every age-related decline in a physiological system is delayed or slowed when compared with findings in ad libitum–fed animals. This includes metabolic rate, neuroendocrine changes, glucose regulation, thermoregulation, immune response, and circadian rhythms. The slowing of the rate of aging observed in CR can be correlated with a reduction in cellular oxidative stress and other processes that lead to damaged proteins. One of the more interesting and consistent findings from CR studies in rodents is that various chaperone proteins are upregulated. Chaperone proteins mark misfolded or damaged proteins for catabolism and removal from the cell. Thus, a reduction in the accumulation of damage may be one mechanism that slows the rate of aging during CR.

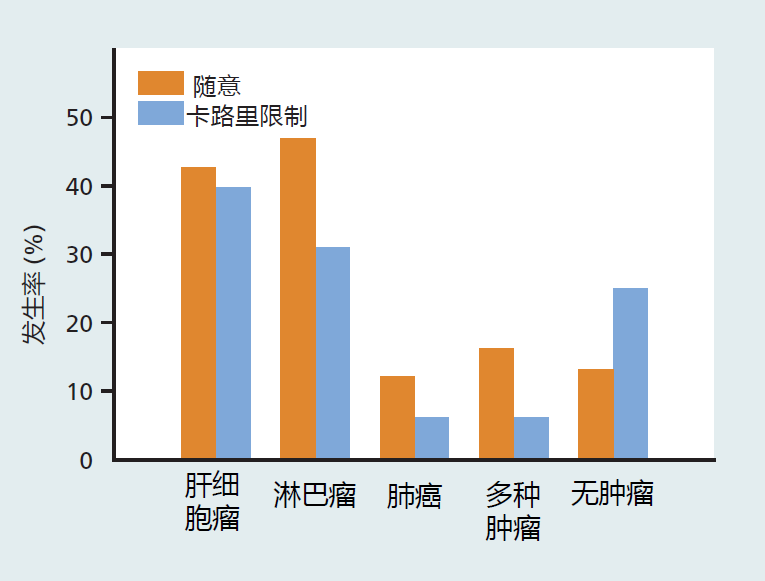

Calorie restriction also delays or prevents the appearance of many age-related diseases. In calorie-restricted rats, the incidence of glomerulonephritis, a disease leading to renal failure and the most common cause of natural death in rats, is about 50% of the rate observed in ad libitum–fed animals. In addition, glomerulonephritis in calorie-restricted rats occurs significantly later in the life span. The occurrence of cancer and number of tumors are lower in calorie-restricted than in ad libitum–fed mice (Figure 10.5).

Figure 10.5 The incidence of cancer in mice at the time of death, following long-term calorie restriction versus ad libitum feeding. The CR was started at 12 months of age. Thus, CR can have a significant impact on age-related disease even when started in middle age. (Data from Weindruch R, Walford RL. 1982. Science 215:1415–1418. With permission from AAAS.)

Calorie restriction in simple organisms used to investigate genetic and molecular mechanisms

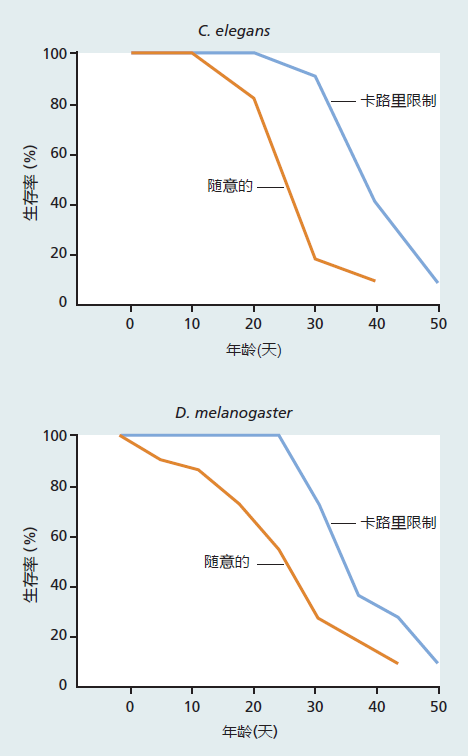

The vast majority of investigations using CR have been carried out in mice and rats, laboratory models that present significant challenges to the study of genetic and molecular mechanisms. Thus, biogerontologists have turned to less complex organisms such as yeast, worms, and flies to better understand the genetic and molecular mechanisms underlying life extension through CR (Figure 10.6). Most results have been consistent with our current understanding of the evolutionary and genetic bases of longevity. Extension of life span by CR in Caenorhabditis elegans and Drosophila has been associated with a delay in the start of reproduction.

In addition, fecundity in calorie-restricted worms and flies decreases significantly compared with that in ad libitum–fed animals. These findings are exactly what would be predicted based on mathematical models and experimental evidence of the trade-off between reproductive success and longevity that we discussed in Chapter 3.

Figure 10.6 Effect of calorie restriction in C. elegans and Drosophila. Calorie restriction in C. elegans is achieved by reducing the concentration of E. coli in the culture medium, in this case to 50% of that given to the ad libitum–fed worms. Calorie-restricted adult Drosophila are fed a sucrose/yeast solution at 50% of the concentration given to ad libitum–fed flies.

Thus far, the results in yeast, worms, and flies are consistent with the genetic mechanisms that affect life span as discussed in Chapter 5. Life extension in yeast undergoing CR seems to be associated with longevity genes involved in aerobic metabolism, that is, the SIR genes. In fact, CR did not further increase the extension of life span in yeast engineered to overexpress SIR2. Much more research on simple organisms is needed to determine the underlying mechanisms of CR.

Calorie restriction in nonhuman primates may delay age-related disease

Two separate calorie-restriction trials in rhesus macaque monkeys, started during the 1980s, are now complete. One study reported that both mean and maximal life span are increased significantly in the calorie-restricted monkeys, although the difference in maximal life span was small compared to differences in other species. In the other study, only mean life span of the CR monkeys was significantly greater as compared to the ad libitum group.

Notwithstanding the lack of a definitive conclusion on the longevity question, CR in rhesus macaques seems to have positive effects on markers of age-related disease and time-dependent functional loss. As would be expected, calorie-restricted monkeys weigh significantly less than ad libitum–fed animals. The difference in weight reflects a decrease in both fat and lean mass. In other words, the animals were smaller but proportional for their size. In addition, fasting blood insulin and glucose levels decrease and insulin sensitivity increases in CR. Combined with the lower total fat mass, these data indicate that calorie-restricted monkeys are at a lower risk than ad libitum–fed monkeys for developing type 2 diabetes. The lower serum triglycerides, LDL cholesterol, and blood pressure observed in the calorie-restricted animals are consistent with a decreased risk of cardiovascular disease. Effects on time-dependent functional loss in CR versus ad libitum–fed monkeys were suggested by a delay in the appearance of sarcopenia, hearing loss, and brain atrophy in several subcortical regions.

Effectiveness of calorie restriction to extend life span in humans remains unknown and controversial

The positive health effects of low-calorie diets for overweight and obese individuals are well known. What remains less well known is whether reduced-calorie diets, CR, or both in normal-weight, healthy individuals result in the same improvement to known risk factors for chronic disease as that seen in rodents, nonhuman primates, and obese individuals. A few short-term CR studies in normal-weight individuals suggest a positive effect, but these investigations have been fraught with difficulties that lessen the impact of the results. High dropout rates, small sample sizes, and problems with participants adhering to the strict dietary protocol required to achieve the appropriate level of CR have led many biogerontologists to question whether CR in humans is feasible.

Many of the issues and problems surrounding the efficacy of human CR interventions have recently been evaluated in the largest and longest longitudinal human CR trial. This investigation followed a 2-year period of CR in 143 young and old, male and female, nonobese, healthy subjects. Each individual self-selected a diet intended to reach a goal of a 25% reduction in calories from their pre-CR baseline intake. All subjects received intensive psychological and nutritional support throughout the investigation to help with compliance and ensure nutrient requirements were met. The 25% reduction selected for this study reflected an amount close to that used in rodent and nonhuman primate investigations that resulted in metabolic and hormonal changes associated with extended life span. A 25% reduction in calories in the human CR group represented about 600 kcal per day.

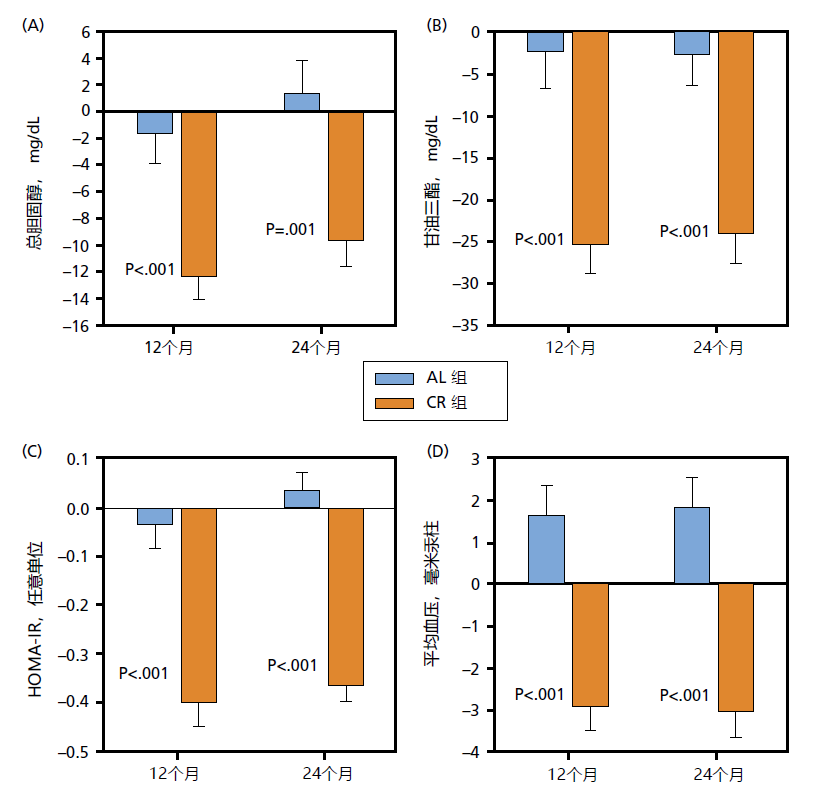

The average reduction in calories during the first 6 months was 19% (475 kcal/day), 6 percentage points below the 25% goal. Over the next 18 months of the 2-year study, subjects were only able to achieve an average reduction of 9% (250 kcal/day) from their baseline values. Many of the subjects returned to calorie intake amounts close to baseline value before the 2-year trial had ended. The 2-year CR trial resulted in health outcomes expected from a moderate weight loss/low-calorie diet. That is, those on the CR protocol experienced significant loss in body weight (10%) and fat mass (26%) while maintaining fat-free mass. Significant improvements to known risk factors for both cardiovascular disease and type 2 diabetes were also observed (Figure 10.7).
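How far the participants fell short of the 25% target can be worked out directly from the percentages reported above. The following Python sketch computes the shortfall in percentage points and the fraction of the goal achieved in each period. It uses only the 25%, 19%, and 9% figures from the text; the function names are illustrative.

```python
GOAL_PERCENT = 25.0  # target reduction in calories stated for the trial


def shortfall_points(achieved_percent: float, goal_percent: float = GOAL_PERCENT) -> float:
    """Percentage points by which the achieved reduction misses the goal."""
    return goal_percent - achieved_percent


def fraction_of_goal(achieved_percent: float, goal_percent: float = GOAL_PERCENT) -> float:
    """Achieved reduction expressed as a fraction of the goal."""
    return achieved_percent / goal_percent


if __name__ == "__main__":
    # Average reductions reported in the text for the two study periods.
    periods = [("first 6 months", 19.0), ("following 18 months", 9.0)]
    for label, achieved in periods:
        print(
            f"{label}: {achieved:.0f}% reduction, "
            f"{shortfall_points(achieved):.0f} points short, "
            f"{fraction_of_goal(achieved):.0%} of the goal"
        )
```

By this measure, participants reached roughly three-quarters of the intended restriction early in the trial and a little over one-third of it afterward.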

Figure 10.7 Change in serum total cholesterol (A), serum triglycerides (B), insulin resistance (C), and mean blood pressure (D) following two years of ad libitum (AL, n = 75) and calorie restriction feeding in men and women (~250–400 kcal/day, n = 143). HOMA-IR (homeostatic model assessment for insulin resistance) is an algorithm used to estimate insulin resistance from resting levels of blood glucose and insulin. Insulin resistance is a biomarker for type 2 diabetes. In addition to the cardiovascular risk factors shown here, LDL-cholesterol decreased and HDL-cholesterol increased after 2 years in the CR group. It is generally accepted that a decrease in total cholesterol, serum triglycerides, LDL-cholesterol, and mean blood pressure and an increase in HDL-cholesterol reduce the risk of heart disease. Decreasing HOMA-IR suggests a reduced risk of type 2 diabetes. (Taken from Ravussin E et al. 2015. J Gerontol A Biol Sci Med Sci. 70:1097–1104. FIGURE 4. With permission from The Gerontological Society of America and Oxford University Press.)
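The caption describes HOMA-IR only as an algorithm based on fasting glucose and insulin. A widely used closed-form approximation multiplies fasting insulin (µU/mL) by fasting glucose (mmol/L) and divides by 22.5. The Python sketch below implements that approximation. Whether the trial used this exact form is an assumption, and the example values are hypothetical, not trial data.

```python
# Approximate factor for converting glucose from mg/dL to mmol/L.
MG_DL_PER_MMOL_L_GLUCOSE = 18.0


def homa_ir(fasting_glucose_mmol_l: float, fasting_insulin_uu_ml: float) -> float:
    """Estimate insulin resistance with the common HOMA-IR approximation.

    HOMA-IR = glucose (mmol/L) * insulin (microunits/mL) / 22.5
    """
    if fasting_glucose_mmol_l <= 0 or fasting_insulin_uu_ml <= 0:
        raise ValueError("glucose and insulin must be positive")
    return fasting_glucose_mmol_l * fasting_insulin_uu_ml / 22.5


def homa_ir_from_mg_dl(fasting_glucose_mg_dl: float, fasting_insulin_uu_ml: float) -> float:
    """Same estimate when glucose is reported in mg/dL."""
    glucose_mmol_l = fasting_glucose_mg_dl / MG_DL_PER_MMOL_L_GLUCOSE
    return homa_ir(glucose_mmol_l, fasting_insulin_uu_ml)


if __name__ == "__main__":
    # Hypothetical fasting values for one person, before and after an intervention.
    before = homa_ir(fasting_glucose_mmol_l=5.0, fasting_insulin_uu_ml=9.0)
    after = homa_ir(fasting_glucose_mmol_l=5.0, fasting_insulin_uu_ml=6.0)
    print(f"HOMA-IR before: {before:.2f}, after: {after:.2f}")
```

Because the estimate is a product of the two fasting values, a drop in either fasting insulin or fasting glucose lowers HOMA-IR, which is the direction of change the caption associates with reduced diabetes risk.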

In summary, the human CR trial was unable to evaluate whether a reduction in calories to the level associated with life extension in rodents and nonhuman primates was also effective in humans, because participants did not sustain the 25% target. Instead, the trial demonstrated that maintaining a severe reduction in calories over an extended period will be challenging for the general population. The results of this human CR trial did confirm the conclusions of previous investigations that a moderate weight loss/low-calorie diet significantly reduces many risk factors associated with time-dependent chronic disease.